Cambricon goes public: how does an AI chip differ from an ordinary chip? A roundup of the world’s AI chip companies.

Editor’s note: This article is from Tencent Technology (ID: qqtech), written by Zach Xiaosheng, and reprinted by Cyzone.

What NPUs (AI chips) and GPUs have in common is that they can run many threads in parallel. The NPU takes this a step further through hardware-level optimizations, such as providing easily accessible caches for genuinely different kinds of processing cores. These high-capacity cores are simpler than those of a "conventional" processor because they do not need to perform many types of tasks.

On July 20, Cambricon was officially listed on the A-share Science and Technology Innovation Board (STAR Market), becoming the first AI chip stock and prompting heated discussion in the industry.

According to industry data, China has more than 1,500 IC design companies, but only a little over 20 of them make AI chips. Among these, Cambricon is the most prominent, especially after its recent IPO, when its shares soared on their first day of trading on the STAR Market. So what is an AI chip, and how does it differ from an ordinary CPU?

In principle, an AI processor is a specialized chip built for artificial intelligence and machine learning workloads. It is optimized for deep learning, making the mobile device it sits in smart enough to imitate aspects of the human brain, and it is typically a system of multiple processors with specific functions. An ordinary chip (an ordinary CPU) comes in a smaller package and is designed to provide all the system functions needed to support mobile device applications.

Most of the time, the marketing teams of major companies find the term AI (artificial intelligence) very "advanced and glamorous", so they attach it to almost any possible commercial use. So you have surely heard of the "artificial intelligence chip", which is really a rebranded NPU (neural processing unit). NPUs are a special type of ASIC (application-specific integrated circuit) designed to bring machine learning to the mobile market at scale.

These ASICs have a special architecture that lets them execute a machine learning model on the device itself, instead of sending data to a server and waiting for its response. On-device execution may be less powerful, but it is faster because there are fewer obstacles between the data and the processor.

Broadly speaking, we can take the NPU to be the AI chip and the CPU to be the ordinary chip.

The CPU works well under general-purpose loads because it has a high IPC (instructions per cycle) and is good at executing serial instruction streams. The CPU follows the von Neumann architecture, whose core idea is to store programs and execute them sequentially. A CPU’s architecture devotes a lot of area to storage units (cache) and control units, while the computing units occupy only a small part by comparison. As a result, the CPU is very limited in large-scale parallel computing and is better at logic control.

What NPUs and GPUs have in common is that they can run many threads in parallel. The NPU takes this a step further through hardware-level optimizations, such as providing easily accessible caches for genuinely different kinds of processing cores. These high-capacity cores are simpler than those of a "conventional" processor because they do not need to perform many types of tasks. This whole set of optimizations makes the NPU more efficient, which is why so much research and development is being invested in ASICs.

Running a machine learning model requires the CPU, DSP, GPU and NPU to work in sync, since many processing units on the chip cooperate on the task. This also explains why such workloads are "heavy" for mobile devices.

One advantage of NPUs is that they mostly focus on low-precision arithmetic, new dataflow architectures, or in-memory computing. Unlike GPUs, they are more concerned with throughput than latency.
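Low-precision computation of the kind mentioned above can be illustrated with a small sketch. It assumes a simple symmetric 8-bit quantization scheme, which is one common approach and not necessarily what any particular NPU does; the function names `quantize` and `int8_dot` and the sample numbers are made up for illustration.

```python
def quantize(values, scale):
    """Map floats to 8-bit integers: divide by scale, round, clamp to [-128, 127]."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_dot(a_q, b_q, scale_a, scale_b):
    """Multiply-accumulate in integer arithmetic, then rescale once at the end."""
    acc = sum(x * y for x, y in zip(a_q, b_q))  # integer-only inner loop
    return acc * scale_a * scale_b


# Hypothetical weights and inputs, chosen only for illustration.
weights = [0.5, -1.0, 0.25, 0.75]
inputs = [1.0, 2.0, -4.0, 0.5]

# Symmetric scaling: the largest magnitude maps to 127.
scale_w = max(abs(w) for w in weights) / 127
scale_x = max(abs(x) for x in inputs) / 127

w_q = quantize(weights, scale_w)
x_q = quantize(inputs, scale_x)

exact = sum(w * x for w, x in zip(weights, inputs))   # full-precision result
approx = int8_dot(w_q, x_q, scale_w, scale_x)         # low-precision result

print(f"exact={exact:.4f} approx={approx:.4f}")
```

The inner loop touches only small integers, which is cheaper in silicon than floating-point math; the price is a small rounding error in the result.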

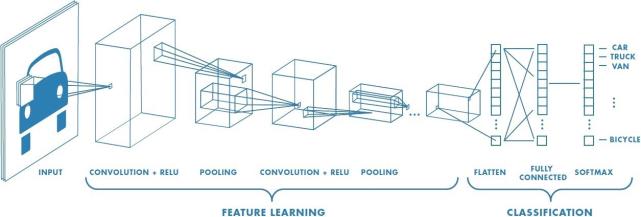

Of course, the AI algorithm itself is very important. CNNs (convolutional neural networks) are commonly used in image recognition and similar fields, while speech recognition, natural language processing and related fields mainly use RNNs (recurrent neural networks); these are two different kinds of algorithm. In essence, however, both come down to multiplying and adding matrices or vectors, combined with a few other operations such as division and exponentiation.
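To make the "multiply and add" point concrete, here is a minimal pure-Python sketch of a convolution and a single RNN step. It is a teaching example under simplifying assumptions (no padding, stride 1, a plain `tanh` RNN cell), and the helper names `matvec`, `conv2d` and `rnn_step` are chosen here for illustration rather than taken from any framework.

```python
import math


def matvec(matrix, vector):
    """Matrix-vector product: each output is a sum of element-wise products."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def conv2d(image, kernel):
    """2D convolution as used in CNNs (no padding, stride 1)."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    return [
        [
            # One output pixel = k_h * k_w multiply-adds.
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(k_h) for dj in range(k_w))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def rnn_step(w_x, w_h, x, h):
    """One RNN step: h_new = tanh(W_x * x + W_h * h)."""
    pre = [a + b for a, b in zip(matvec(w_x, x), matvec(w_h, h))]
    # tanh is where the exponentiation and division come in.
    return [math.tanh(p) for p in pre]


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0], [0, -1]]
feature_map = conv2d(image, kernel)

identity = [[1, 0], [0, 1]]
zeros = [[0, 0], [0, 0]]
hidden = rnn_step(identity, zeros, [0.5, -0.5], [0.0, 0.0])
```

Both functions spend nearly all of their time in the same pattern, a running sum of products, which is exactly the operation AI chips are built to do in bulk.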

In addition, mature AI algorithms are optimized around the specific mathematics of convolution and weighted summation, and this processing is very fast. The chip that runs them is like a GPU without the graphics hardware. For an AI chip, once the target size is fixed, the total number of multiply-add operations is fixed too, for example a trillion. A program that an AI chip finishes in the time it takes to eat a meal might take a CPU several weeks, and no commercial company will waste that time.
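The claim that the operation count is fixed once the sizes are fixed can be checked with simple arithmetic. The sketch below counts multiply-adds for one convolution layer and converts a count into run time. The layer dimensions and both throughput figures are hypothetical round numbers picked for illustration, not measurements of any real chip.

```python
def conv_layer_macs(out_h, out_w, out_channels, in_channels, k_h, k_w):
    """Multiply-add count for one convolution layer of fixed size."""
    return out_h * out_w * out_channels * in_channels * k_h * k_w


def seconds_to_run(total_macs, macs_per_second):
    """Time needed at a given sustained multiply-add rate."""
    return total_macs / macs_per_second


# Hypothetical layer: 56x56 output, 64 input and 64 output channels, 3x3 kernel.
layer_macs = conv_layer_macs(56, 56, 64, 64, 3, 3)

# The article's example workload: a trillion multiply-adds.
workload = 10**12

# Assumed sustained rates, for illustration only.
SEQUENTIAL_RATE = 10**9    # one billion multiply-adds per second
PARALLEL_RATE = 10**12     # one trillion multiply-adds per second

slow = seconds_to_run(workload, SEQUENTIAL_RATE)
fast = seconds_to_run(workload, PARALLEL_RATE)

print(f"layer multiply-adds: {layer_macs:,}")
print(f"sequential: {slow:.0f} s, parallel: {fast:.0f} s")
```

Because the count depends only on the layer sizes, the run time is set almost entirely by how many multiply-adds the hardware can sustain per second, which is the quantity AI chips are designed to maximize.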

Besides Cambricon, China has other well-known AI chip companies, such as Bitmain, Horizon Robotics, Tianxin Zhixin, Yizhi Electronics, Exploration Technology, Enflame (Suiyuan) Technology, HiSilicon and Canaan. All of them have been through a period of real-world deployment and testing, from 2015 to the present, to reach their current position. Each company’s products are distinctive, with their own trade-offs in power consumption, performance and application scenarios, which lets each occupy a place in China’s vast market.

China’s AI chip companies are in a development boom. What about AI chips abroad? Below are the companies we consider the top developers of AI chips, in no particular order: those that have demonstrated their technology and have put it into production or will do so soon. The details are as follows:

1. Alphabet (Google’s parent company)

Google’s parent company is pushing the development of artificial intelligence technology in many fields, including cloud computing, data centers, mobile devices and desktop computers. Perhaps the most noteworthy is its Tensor Processing Unit (TPU), an ASIC designed specifically for Google’s TensorFlow programming framework and used mainly for two branches of AI: machine learning and deep learning.

Google’s Cloud TPU is used in data centers and cloud solutions and is about the size of a credit card, while the Edge TPU is smaller than a one-cent coin and is designed for specific edge devices. Nevertheless, analysts who follow this market closely say that the Edge TPU is unlikely to appear in the company’s own smartphones and tablets in the short term, and is more likely to be used in higher-end, enterprise-grade and expensive machines and equipment.

2. Apple

Apple has been developing its own Arm-based chips for many years and may eventually stop using suppliers such as Intel. Apple has largely disentangled itself from Qualcomm, and it seems determined to go its own way in artificial intelligence.

The company uses the A13 Bionic chip in its latest iPhones and iPads. The chip includes Apple’s Neural Engine, a part of the circuitry that third-party applications cannot access. The A13 Bionic is faster and consumes less power than its predecessor. According to reports, the A14 is currently in production and may appear in more of the company’s mobile devices this year.

3. Arm

Chip designs from Arm (Arm Holdings) have been adopted by every prominent technology manufacturer, including Apple. As a chip designer, Arm does not make its own chips, which gives it some advantages, much as Microsoft does not make its own computers. In other words, Arm has great influence in the market. The company is currently developing AI chip designs along three main lines: Project Trillium, an "ultra-efficient" and scalable new processor aimed at machine learning applications; the Machine Learning Processor, whose purpose is self-evident; and Arm NN (NN is short for neural network), which works with TensorFlow, Caffe (a deep learning framework) and other frameworks.

4. Intel

As early as 2016, the Wall Street Journal reported that chip giant Intel had announced the acquisition of the startup Nervana Systems. Intel would acquire the company’s software, cloud computing services and hardware so that its products could better adapt to the development of artificial intelligence. Its AI chip series is called the "neural network processor": an artificial neural network imitates the way the human brain works and learns through experience and examples, which is why you often hear that machine learning and deep learning systems need "training". With the earlier release of Nervana, Intel appears to be prioritizing problems in natural language processing and deep learning.

5. Nvidia

As mentioned above, GPUs can handle AI tasks much faster than CPUs, and Nvidia holds a prominent position in the GPU market. The company likewise seems to have gained an advantage in the nascent AI chip market. The two technologies are closely related, and Nvidia’s progress in GPUs will help accelerate the development of its AI chips. In fact, GPUs seem to underpin Nvidia’s AI products, and its chipsets can be called AI accelerators. Jetson Xavier was released in 2018, and Nvidia CEO Jensen Huang (Huang Renxun) said at a news conference: "This small computer will become the brain of future robots".

Deep learning seems to be Nvidia’s main interest. Deep learning is a branch of machine learning built on multi-layer neural networks. Loosely, you can think of ordinary machine learning as short-term learning from relatively limited data sets, while deep learning uses large amounts of data collected over a long period to produce results aimed at deeper, underlying problems.

6. AMD (Advanced Micro Devices)

Like Nvidia, AMD is a chip manufacturer closely associated with graphics cards and GPUs, partly because of the growth of the computer game market over the past few decades and of the bitcoin mining industry. AMD provides hardware and software solutions for machine learning and deep learning, such as EPYC CPUs and Radeon Instinct GPUs. EPYC is AMD’s processor line for servers (mainly used in data centers), while Radeon is a graphics processor line aimed mainly at gamers. Other chips from AMD include Ryzen and the perhaps better-known Athlon. The company seems to be at a relatively early stage in developing AI-specific chips, but given its relative strength in GPUs, observers believe it will become one of the leaders in this market. AMD has signed a contract to supply EPYC and Radeon systems to the U.S. Department of Energy for "Frontier", which is intended to be one of the fastest and most powerful supercomputers in the world.

7. Qualcomm

Qualcomm made a lot of money from its cooperation with Apple at the start of the smartphone boom, and it may feel left out in the cold by Apple’s decision to stop buying its chips. Of course, Qualcomm is no small player in this field, and with an eye to the future it has been making some major investments.

Last year, Qualcomm released a new "cloud artificial intelligence chip", which seems tied to its work on fifth-generation (5G) telecommunications networks. The two technologies are considered the basis for a new ecosystem of self-driving cars and mobile computing devices. Analysts say that Qualcomm is a latecomer to AI chips, but the company has rich experience in the mobile device market, which will help it realize its goal of "making artificial intelligence on devices everywhere".

Of course, other large international companies, such as Samsung, TSMC, Facebook, IBM and LG, are also developing their own AI chips. Whoever masters cutting-edge AI chips first will get a share of the new economic upswing.

The reality described by the marketing departments of major companies is completely different from the reality outside those companies. Decades of research have given us new ways to process information and classify input faster than ever, but there is no real AI in the hardware we buy. Whichever chip company can identify the market’s pain points and deliver real applications first will gain a great advantage in the AI chip race.

At present, the global artificial intelligence industry is still developing at high speed, and its spread across different industries offers broad market prospects for AI applications. A commercialized society needs those applications. The AI chip is the hardware foundation on which the algorithms run, and it is also the strategic high ground of the coming AI era, so all the world’s top companies will fight for it. However, because current AI algorithms each have their own strengths and weaknesses, they perform best only when matched to a suitable scenario. I also hope that AI can reach ordinary people soon.

From the perspective of industrial development, AI chips are still at an early stage, and there is extraordinary room for innovation in both scientific research and commercial applications. In application scenarios, AI chips will evolve, as algorithms iterate, into general-purpose intelligent chips with greater flexibility and adaptability; this is the inevitable direction of the technology. The main characteristics and trends of future AI chips will be narrower bit widths for neural network parameters, more customized distributed memory designs, sparser large-scale vector implementations, higher computing efficiency, smaller size and better energy efficiency in complex heterogeneous environments, and the integration of computing and storage.

References:

1. Research Report on Investment Prospect of IC Design Industry in China in 2019.

2. The development status and trend of artificial intelligence chips [J] Science and Technology Herald

3. The picture comes from the Internet.
